OpenAI Hires a KillSwitch Engineer 😉, while the market explodes with new AI offerings.

We probably need more nuanced vocabulary. Just saying “new AI” doesn’t really capture what’s actually going on...

Welcome to RiD, my side/passion project on all things language AI. It’s basically where you find out today what’s happening tomorrow (hopefully).

I got hold of the latest GPT-4 API through early developer access. Yeah, it’s ridiculous. With a simple API hookup, I was able to send an article of ~1,500 words and get back a pretty cogent 115-word summary in ~1 second:

'The New York Times seemed to have shifted its editorial direction after the 2016 presidential election, aiming to include a broader range of perspectives. However, during the latter half of Trump’s presidency, the paper narrowed its content and employee roster. Recently, the Times appears to be moving towards increased ideological diversity again, hiring new opinion writers with diverse viewpoints and responding to progressive criticism in a more firm manner. Although institutional change is not always permanent, it is important to recognize progress when it is seen, and the Times is showing improvements in trustworthiness and diversity of opinions.'

Check the lucidity and accuracy for yourself.
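For anyone wanting to try a similar hookup, here is a minimal sketch using only the Python standard library against OpenAI’s Chat Completions endpoint. It is not the setup used to produce the summary above: the model name `gpt-4`, the system-prompt wording, the word limit and the `temperature` value are all assumptions you should adjust, and `build_summary_request`/`summarize` are hypothetical helper names.

```python
import json
import urllib.request

# OpenAI Chat Completions endpoint; model name and prompt wording are assumptions.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_summary_request(article: str, max_words: int = 120, model: str = "gpt-4") -> dict:
    """Build a Chat Completions payload asking for a short summary (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            # The system message sets the task; the article goes in as the user message.
            {"role": "system",
             "content": f"Summarize the user's article in at most {max_words} words."},
            {"role": "user", "content": article},
        ],
        # Low temperature (an assumption) keeps summaries closer to the source text.
        "temperature": 0.2,
    }


def summarize(article: str, api_key: str) -> str:
    """POST the payload and return the text of the first choice."""
    payload = json.dumps(build_summary_request(article)).encode("utf-8")
    req = urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # The reply text lives in the first choice's message.
    return body["choices"][0]["message"]["content"].strip()
```

Usage would be something like `summarize(open("article.txt").read(), os.environ["OPENAI_API_KEY"])`, assuming your key is in that environment variable and the article is saved to a local file.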

Also, we’re finding new kinds of jobs everywhere these days… OpenAI KillSwitch Engineers needed!!

Here is the TL;DR list for this week’s newsletter:

Text to video arrives in style

Deepfake videos nearing perfection

Information velocity, journalism & facts as misinformation

AI assistance. From in-house chef to “Her”

Text to video arrives

Text to video has arrived, both from RunwayML (pushing aside Midjourney and Stable Diffusion) and in various open source versions, and it is very good.

Beyond the hype and sheer amazingness of this, many people are now constantly banging on about prompt engineering. That will be an anachronism by 2024. People will work out very quickly how to prompt the AI, but the AI will also work out very quickly what you (specifically you!) mean by your prompt and iterate with you.

This release is a big step toward constant content creation by everyone, everywhere, all the time, for all manner of purposes. It will drive costs down exponentially and volume up, and I suspect creativity up too. As always, context and discovery will remain the parallel value propositions, and the opportunity, for serving inundated consumers.

For those more technically minded and looking to integrate this capability on the back end, the Hugging Face version is here: https://huggingface.co/spaces/damo-vilab/modelscope-text-to-video-synthesis

Deepfakes nearing perfection

The tweet says it all: “Everyone will be able to be anyone soon”. This release adds video synthesis to voice and image synthesis and opens the floodgates for anyone to recreate anyone at will.

Basically, what I wrote above applies here too.

Information velocity, journalism & facts as misinformation

“The movement around the advancing field of fact-checking should not seek to consolidate power, but to distribute it”

- https://consilienceproject.org/how-to-mislead-the-facts/

It’s a little off topic, but this week I discovered a thread of formalized thinking (not resistance exactly) about how society should respond to all these monumental changes we are living through, and how they are really affecting us, individually and collectively. Forget web3. These groups are looking at how we harness our collective attention through various means rather than how we control the flows.

Personally, I am a complete believer that reverting to top-down hierarchies of information is NOT the solution to misinformation and disinformation. We have evolved into a state of completely distributed, constant and exponential information flows, and overall that seems better than far more centralized control of information. The issue is that we haven’t yet been able to harness the technology to process all that information so we can pick up on common threads. Right now it is pulling us apart, and the advent of these AI tools will only accelerate that.

One of the groups looking at this is Common Sense[makers], a small collective researching and developing distributed protocols, practices, and tools for collective “sensemaking”. They’re thinking about how we can use open protocols to distribute and integrate the ways we interact with information across the digital universe, as well as new approaches to the stewardship of global collective behavior. (This was a great paper: all pretty theoretical and academic for now, but easy reading, if you can pay attention.)

Alternatively, there is a global movement for next-generation political economies, committed to advancing plurality, equality, community, and decentralization through upgrading democracy, markets, the data economy, the commons, and identity. So, small thing…

So yeah, there are some people out there thinking about exactly how we should organize ourselves in the face of what’s happening.

AI assistance. From in-house chef to “Her”

Anyway, back to our blissful, starry-eyed amazement at this new tech that is tearing us apart 😎…

Here are some of the novel hacks people are pulling off with GPT-4. Grab your phone, point it at the fridge, ask for recipe options. Seriously.

Or take this guy, who hacked together voice recognition, GPT-3.5 (aka ChatGPT) and text to voice, and wrapped it all in an app (and seriously, people, it’s not that hard). Probably over a weekend!

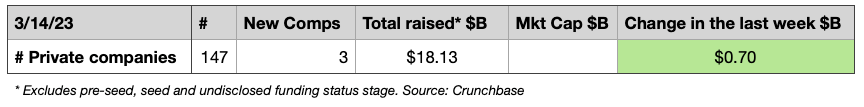
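To show why a weekend is plausible, here is a minimal sketch of that kind of app as a three-stage loop: speech to text, a chat model, then text to speech. It assumes nothing about the actual app’s code; `VoiceAssistant`, `transcribe`, `chat` and `speak` are hypothetical names, and each stage is a plain function so any real speech or chat service could be plugged in. The stand-ins in the usage lines are toy stubs, not real services.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class VoiceAssistant:
    """Hypothetical pipeline: speech-to-text -> chat model -> text-to-speech."""
    transcribe: Callable[[bytes], str]    # audio in -> user text
    chat: Callable[[List[Message]], str]  # full conversation -> reply text
    speak: Callable[[str], bytes]         # reply text -> audio out
    system_prompt: str = "You are a helpful, concise voice assistant."
    history: List[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Seed an empty conversation with the system prompt once.
        if not self.history:
            self.history.append({"role": "system", "content": self.system_prompt})

    def handle_turn(self, audio: bytes) -> bytes:
        """Process one spoken turn and return the spoken reply."""
        user_text = self.transcribe(audio)
        self.history.append({"role": "user", "content": user_text})
        reply = self.chat(self.history)
        # Keep the reply so the model sees the whole conversation next turn.
        self.history.append({"role": "assistant", "content": reply})
        return self.speak(reply)


# Toy stand-ins (hypothetical) in place of real STT / chat / TTS services.
assistant = VoiceAssistant(
    transcribe=lambda audio: audio.decode("utf-8"),
    chat=lambda msgs: f"You said: {msgs[-1]['content']}",
    speak=lambda text: text.encode("utf-8"),
)
print(assistant.handle_turn(b"what can I cook tonight?"))
# prints b'You said: what can I cook tonight?'
```

Keeping the whole message history and resending it each turn is what makes the assistant feel conversational; swapping the stubs for real services is the “weekend” part.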

Basic market data tracker:

No change here…

Happenings this week:

Commercial:

Reading all the press on OpenAI, Google, etc., one can forget that other countries and companies are rolling out comparable products. Tel Aviv startup rolls out new advanced AI language model to rival OpenAI.

There's a New Job in Town: Prompt Engineer (to the Tune of $335K/Year)

Apple is reportedly experimenting with language-generating AI

Technical:

Baidu Unveils ERNIE Bot, the Latest Generative AI Mastering Chinese Language

Ethical & other:

Nope nothing to see here.

Other media:

Pods:

Freaked Out by AI? We really can prepare for AI

Sam Harris on AI in his latest conversation with Lex Fridman, roughly 3 hr 23 min in.